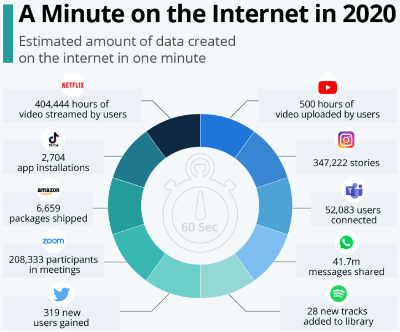

We are surrounded by data, from images captured on our mobile phones and videos streamed online to the elaborate sensor-fusion data required for autonomous driving. As shown in Figure 1, an estimated 500 hours of video were uploaded to YouTube every minute in 2020, and more than 400,000 hours of video were streamed every minute from Netflix alone.

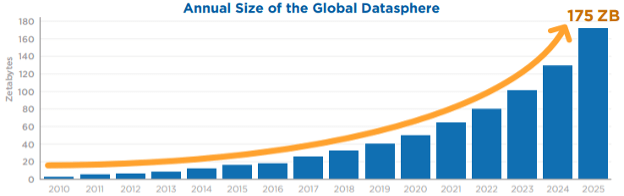

Every facet of our lives, especially in this post-COVID-19 world, from shopping online to interacting with friends and family over social media, is made possible by data, and the amount of data we generate is expected to keep growing dramatically. In fact, the total amount of data is expected to grow to 175 zettabytes (1 ZB = 1 trillion gigabytes) by 2025, up from 33 ZB in 2018 (Figure 2).

Indeed, our world is undergoing a digital transformation as Big Data becomes pervasive: we monitor and digitize everything and systematically extract information from raw data to gain valuable insights.

Big Data Lives in the Cloud

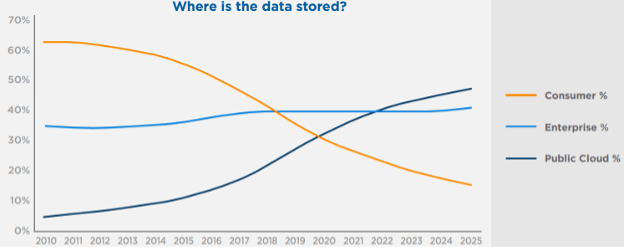

Along with the explosion in data creation, there is an accompanying shift in how data is stored and analyzed. While data continues to be created at the endpoints, at the edge, and increasingly in the cloud, a disproportionate share of it is stored in the cloud. As shown in Figure 3, IDC estimates that by 2025, nearly 50% of the world’s data will be stored in public clouds. Public clouds will also account for nearly 60% of worldwide server deployments, providing the compute power needed to process the data stored there. Cloud Service Providers (CSPs) operate large data centers that excel at storing and managing big data while offering storage and compute on demand to end users. Gartner estimates that by 2022, public clouds will be essential for 90% of data and analytics innovation.

AI/ML Complexity Doubles Every 3.4 Months

Artificial Intelligence (AI) workloads running in the cloud bring together data storage and data analytics to address business problems that would otherwise be impossible to tackle.

Gartner estimates that by 2025, 75% of enterprises will operationalize AI to provide insights and predictions in complex business situations. This proliferation of AI requires that the underlying models be quick, reliable, and accurate. Machine Learning (ML), especially Deep Learning (DL), combines AI algorithms, big training data, and purpose-built heterogeneous compute hardware to handle extremely large and constantly evolving datasets.

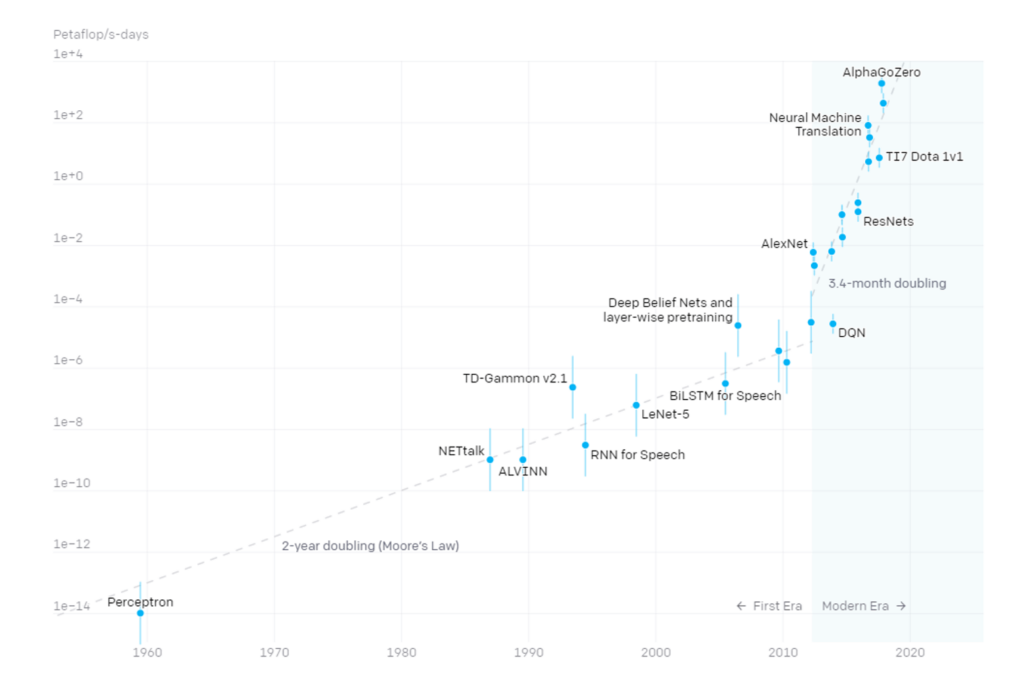

While ML has been around for decades, over the last eight to ten years ML model complexity has far outpaced the Moore’s-law-bound advancements of a single compute node. Figure 4 depicts a historical compilation of the compute required to train AI systems, showing that ML complexity is doubling approximately every 3.4 months, compared with the Moore’s law doubling of transistor count in ICs every two years!
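To make the gap concrete, the short Python sketch below compounds both doubling periods cited above over a few time horizons. It assumes clean exponential growth, which the historical data only approximates, and the function names are illustrative. Over two years, a 3.4-month doubling time compounds to roughly 133x, against 2x for Moore’s law.

```python
# Compare growth implied by two doubling periods (in months):
#   3.4 months -> the AI training-compute doubling time cited in the text
#   24 months  -> the Moore's-law transistor doubling period cited in the text
# Assumes idealized, uninterrupted exponential growth.

def growth_factor(months: float, doubling_period_months: float) -> float:
    """How many times a quantity grows over `months` if it doubles
    every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


def compare(horizon_months: float) -> tuple[float, float]:
    """Return (ML compute growth, Moore's-law growth) over the horizon."""
    ml = growth_factor(horizon_months, 3.4)
    moore = growth_factor(horizon_months, 24.0)
    return ml, moore


if __name__ == "__main__":
    for horizon in (12, 24, 60):
        ml, moore = compare(horizon)
        print(f"{horizon:>3} months: ML compute x{ml:,.0f}, Moore's law x{moore:.2f}")
```

The two-year row is the fairest comparison, since it spans exactly one Moore’s-law doubling.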

Not surprisingly, the industry is investing considerable resources and effort in distributing ML workloads over multiple compute units within a server and across multiple servers in a large data center.

800G Ethernet and Compute Express Link® (CXL®) Enable Distributed ML

Distributing ML workloads across multiple compute nodes is a challenging problem. Running massively parallel distributed training over interconnects that were state of the art just a few years ago exposes a connectivity bottleneck: 90% of wall-clock time is spent on communication and synchronization overhead. The industry has responded with advances in interconnect technology, both higher data rates and lower latencies, to drive resource disaggregation and move large amounts of data within and across servers.
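The cost of that bottleneck can be sketched with a simple Amdahl-style model. This is an idealization that assumes communication and computation do not overlap and that each part shrinks in exact proportion to its speedup; the function name is hypothetical. With 90% of wall time in communication, even infinitely fast compute yields only about a 1.11x overall speedup, while a 4x faster interconnect yields about 3.08x.

```python
# Amdahl-style model of a distributed training step whose wall time is split
# between communication/synchronization and computation.
# Idealized: no overlap between the two parts, perfect scaling of each.

def step_speedup(comm_fraction: float, comm_speedup: float, compute_speedup: float) -> float:
    """Overall speedup when the communication share of wall time becomes
    `comm_speedup` times faster and the compute share `compute_speedup` times faster."""
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be in [0, 1]")
    new_time = comm_fraction / comm_speedup + (1.0 - comm_fraction) / compute_speedup
    return 1.0 / new_time


if __name__ == "__main__":
    f = 0.9  # 90% of wall time spent in communication and synchronization
    # Infinitely fast compute only removes the 10% compute share.
    print(f"compute x inf:   {step_speedup(f, 1.0, float('inf')):.2f}x")
    # A 4x faster interconnect (hypothetical) shrinks the dominant 90% share.
    print(f"interconnect x4: {step_speedup(f, 4.0, 1.0):.2f}x")
```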

Ethernet is the dominant interconnect technology for connecting servers across a data center. A typical data center network topology connects servers over copper interconnects for North-South traffic and optical interconnects for East-West traffic. While existing 200G (8x25G) Ethernet interconnects are based on 25 Gbps NRZ signaling, upcoming deployments in 2023 are designed for 800G (8x100G) interconnects based on 100 Gbps PAM-4 signaling, quadrupling the available interconnect bandwidth between servers.
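The lane arithmetic behind these numbers can be checked with a few lines of Python. PAM-4 encodes 2 bits per symbol versus 1 for NRZ, so a 100 Gbps PAM-4 lane needs about 50 GBaud while a 25 Gbps NRZ lane needs 25 GBaud: a 4x gain in bandwidth for only a 2x increase in symbol rate. The sketch uses nominal rates and ignores FEC and encoding overhead, so real symbol rates are somewhat higher; the helper names are illustrative.

```python
# Per-lane and aggregate Ethernet bandwidth for the two generations in the text.
# Simplification: nominal per-lane data rates; FEC and line-coding overhead
# are ignored, so actual symbol rates are slightly higher.

BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}  # NRZ: 2 signal levels, PAM-4: 4 levels


def link_summary(lanes: int, gbps_per_lane: float, modulation: str) -> dict:
    bits = BITS_PER_SYMBOL[modulation]
    return {
        "aggregate_gbps": lanes * gbps_per_lane,
        # Symbols per second each lane must carry to reach gbps_per_lane.
        "gbaud_per_lane": gbps_per_lane / bits,
    }


if __name__ == "__main__":
    gen200 = link_summary(8, 25, "NRZ")    # 200G = 8 x 25G NRZ
    gen800 = link_summary(8, 100, "PAM4")  # 800G = 8 x 100G PAM-4
    print("200G:", gen200)
    print("800G:", gen800)
    print("bandwidth ratio:", gen800["aggregate_gbps"] / gen200["aggregate_gbps"])
```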

Unlike Ethernet, there has been no dominant industry-standard cache-coherent interface for connectivity within the server. Over the last five years, several standards, including CCIX, Gen-Z, OpenCAPI, NVLink, and, more recently, Compute Express Link® (CXL®), have defined cache-coherent interconnects for distributing the processing of ML workloads. Of these, NVLink is a proprietary interconnect used largely by NVIDIA devices. CXL, on the other hand, has quickly gained wide industry adoption and emerged as the unified cache-coherent server interconnect standard.

CXL defines a scalable, high-bandwidth (16 lanes at 32 GT/s each), low-latency (nanosecond-scale) cache-coherent interconnect fabric running over the ubiquitous PCI Express® (PCIe®) interconnect. The CXL protocol, first introduced by Intel in 2019, has since established itself as the industry standard for interconnecting processing and memory elements within a server and for enabling disaggregated, composable architectures within a rack.
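As a rough illustration of what a x16 link at 32 GT/s provides, the sketch below multiplies out the lane count and transfer rate and then applies the 128b/130b line code of the PCIe 5.0 physical layer. It deliberately ignores flit, header, and protocol overhead, so usable throughput is lower; the function name is hypothetical. The result is 512 Gb/s raw, or about 63 GB/s per direction.

```python
# Raw and line-coded bandwidth of a x16 link at 32 GT/s (the PCIe 5.0 rate).
# At this rate each transfer carries one bit per lane, so GT/s ~ raw Gb/s.
# Only 128b/130b encoding overhead is removed; flit/packet/protocol
# overhead is ignored, so real usable throughput is lower.

def x16_link_bandwidth(lanes: int = 16, gt_per_s: float = 32.0) -> dict:
    raw_gbps = lanes * gt_per_s          # 16 x 32 = 512 Gb/s per direction
    coded_gbps = raw_gbps * 128 / 130    # strip 128b/130b line-code overhead
    return {
        "raw_gbps": raw_gbps,
        "coded_gbps": coded_gbps,
        "gbytes_per_s": coded_gbps / 8,  # bits -> bytes, per direction
    }


if __name__ == "__main__":
    bw = x16_link_bandwidth()
    print(f"raw: {bw['raw_gbps']:.0f} Gb/s per direction")
    print(f"after 128b/130b: {bw['coded_gbps']:.1f} Gb/s = {bw['gbytes_per_s']:.1f} GB/s per direction")
    print(f"both directions: {2 * bw['gbytes_per_s']:.1f} GB/s")
```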

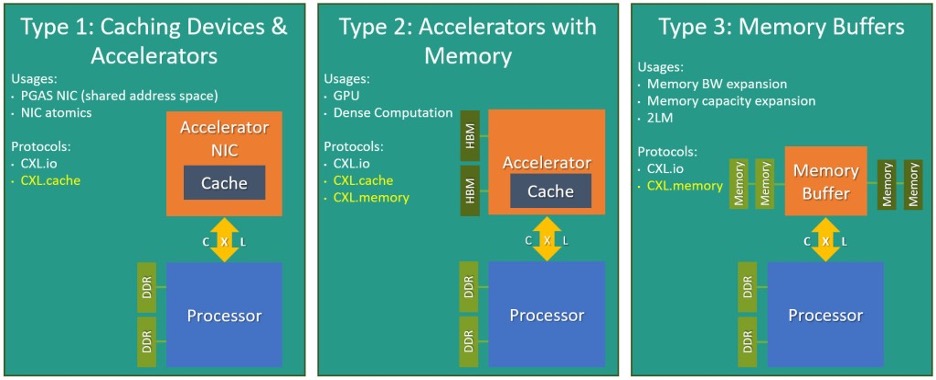

CXL 1.1 defines multiple device types that implement the CXL.io, CXL.cache, and CXL.mem protocols. CXL 2.0, introduced by the CXL Consortium in 2020, adds switching for fan-out and extends support for memory pooling to increase capacity and bandwidth. CXL 3.0, currently in development, will further enable peer-to-peer connectivity and even higher throughput when combined with PCIe 6.0.
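The CXL specification defines these device types as Type 1 (CXL.io and CXL.cache), Type 2 (all three protocols), and Type 3 (CXL.io and CXL.mem). The sketch below encodes that mapping as Python flags so it can be queried; the class and function names are illustrative, and the example devices in the comments are typical uses rather than an exhaustive list.

```python
from enum import Flag, auto


class CxlProtocol(Flag):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration, interrupts, DMA
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host accesses device-attached memory with loads/stores


# Device types and the protocols each one implements, per the CXL specification.
DEVICE_TYPES = {
    "Type 1": CxlProtocol.IO | CxlProtocol.CACHE,                    # e.g. accelerators, SmartNICs
    "Type 2": CxlProtocol.IO | CxlProtocol.CACHE | CxlProtocol.MEM,  # e.g. accelerators with attached memory
    "Type 3": CxlProtocol.IO | CxlProtocol.MEM,                      # e.g. memory expanders/controllers
}


def types_supporting(protocol: CxlProtocol) -> list[str]:
    """Names of device types whose protocol set includes `protocol`."""
    return [name for name, protos in DEVICE_TYPES.items() if protocol in protos]


if __name__ == "__main__":
    print("CXL.mem:", types_supporting(CxlProtocol.MEM))      # ['Type 2', 'Type 3']
    print("CXL.cache:", types_supporting(CxlProtocol.CACHE))  # ['Type 1', 'Type 2']
```

Every type implements CXL.io, which is why CXL devices can be discovered and configured like ordinary PCIe devices.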

Intelligent Cloud Connectivity Solutions

Over the last three years, Astera Labs has introduced a portfolio of CXL, PCIe®, and Ethernet connectivity solutions to remove the performance bottlenecks created by ML workloads in complex heterogeneous topologies. Astera’s industry-leading products address the challenges of connectivity and resource sharing within and across servers. These solutions, in the form of ICs and boards, are purpose-built for the cloud and offer the industry’s highest performance, broad interoperation, deep diagnostics, and cloud-scale fleet management features.

Connectivity Solutions

- Aries Smart DSP Retimers: First introduced in 2019 alongside the CXL 1.1 standard, the Aries portfolio of PCIe 4.0/5.0 and CXL 2.0 Smart Retimers overcomes challenging signal integrity issues while delivering sub-10 ns latency and robust interoperation.

- Taurus Smart Cable Module™ (Taurus SCM™): Taurus SCMs enable Ethernet connectivity at 200GbE/400GbE/800GbE over a thin copper cable while providing a robust supply chain necessary for cloud-scale deployments.

Memory Accelerator Solutions

- Leo CXL Smart Memory Controllers: The industry’s first CXL SoC solution implementing the CXL.mem protocol, the Leo CXL Smart Memory Controller allows a CPU to access and manage CXL-attached DRAM and persistent memory, enabling efficient utilization of centralized memory resources at scale without impacting performance.
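Why centralized, pooled memory can be used more efficiently is easiest to see with a toy capacity model: hosts with dedicated DRAM must each be provisioned for their own peak, while a shared pool only needs to cover the peak of the combined demand, which can never exceed the sum of the individual peaks. The demand numbers and function names below are hypothetical, and the model ignores latency, bandwidth, and how a real memory controller allocates capacity.

```python
# Toy capacity model: dedicated per-host DRAM vs. a shared memory pool.
# The demand traces are made up purely for illustration.

def dedicated_capacity(demands: list[list[int]]) -> int:
    """Sum of per-host peaks: each host provisioned for its own worst case."""
    return sum(max(host) for host in demands)


def pooled_capacity(demands: list[list[int]]) -> int:
    """Peak of the combined demand across hosts at any single time step."""
    return max(sum(step) for step in zip(*demands))


if __name__ == "__main__":
    # Hypothetical memory demand (GB) for 3 hosts over 4 time steps,
    # with each host peaking at a different time.
    demands = [
        [256, 64, 64, 64],
        [64, 256, 64, 64],
        [64, 64, 256, 64],
    ]
    print("dedicated:", dedicated_capacity(demands), "GB")  # 768 GB
    print("pooled:", pooled_capacity(demands), "GB")        # 384 GB
```

The savings depend entirely on how much the hosts’ peaks are staggered; if all hosts peak at once, the two numbers are equal.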

Conclusions

As our appetite for creating and consuming massive amounts of data continues to grow, so too will our need for increased cloud capacity to store and analyze this data. Additionally, the server connectivity backbone for data center infrastructure needs to evolve as complex AI and ML workloads become mainstream in the cloud. Astera Labs and our expanding portfolio of CXL, PCIe, and Ethernet connectivity solutions are essential to unlock the higher bandwidth, lower latencies, and deeper system insights needed to overcome performance bottlenecks holding back data-centric systems.