Learn how Scorpio P-Series Fabric Switches maximize GPU utilization with unparalleled performance, reliability, and scale

As AI infrastructure advances, the need for purpose-built connectivity becomes more pressing. General-purpose switches, initially designed for a wide range of applications, struggle to meet the demands of high-performance AI workloads, which require reliable, efficient, and high-bandwidth connections. They are also burdened by unnecessary features and complexity, making them inefficient and cumbersome for AI-specific deployments.

Astera Labs’ Scorpio P-Series Fabric Switch – the industry’s first PCIe® 6 switch – is specifically designed for AI head-node connectivity, prioritizing power efficiency, signal integrity, and maximum performance per watt. This blog explores how the Scorpio P-Series stacks up against general-purpose switches and why it’s the ideal choice for next-generation AI infrastructure.

Energy Efficient Fabric Switching for GPUs and AI Accelerators

PCI Express® (PCIe) switches in today's AI servers must provide a high-bandwidth, low-latency path from the CPU, network, and storage to GPU or accelerator memory. Moving data from these sources into GPU memory is called data ingestion. In parallel, the PCIe switch must carry data out of the GPU to the scale-out network through the network interface card (NIC).
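To make the two traffic directions concrete, here is a toy Python model that labels each flow through the head-node switch as ingest or scale-out. The endpoint names and the `Flow` and `classify` helpers are hypothetical, and the model only covers the paths named above.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    INGEST = "ingest"        # CPU / network / storage -> GPU memory
    SCALE_OUT = "scale-out"  # GPU -> NIC -> scale-out network


@dataclass(frozen=True)
class Flow:
    src: str  # hypothetical endpoint label, e.g. "CPU", "NIC", "SSD", "GPU"
    dst: str


INGEST_SOURCES = {"CPU", "NIC", "SSD"}


def classify(flow: Flow) -> Direction:
    """Label a flow by direction relative to the GPU (toy model, not a real API)."""
    if flow.dst == "GPU" and flow.src in INGEST_SOURCES:
        return Direction.INGEST
    if flow.src == "GPU" and flow.dst == "NIC":
        return Direction.SCALE_OUT
    raise ValueError(f"flow {flow.src}->{flow.dst} is outside this simple model")


flows = [Flow("CPU", "GPU"), Flow("SSD", "GPU"), Flow("NIC", "GPU"), Flow("GPU", "NIC")]
for f in flows:
    print(f"{f.src} -> {f.dst}: {classify(f).value}")
```

Note that the NIC appears on both sides: data arriving from the network is ingest, while data leaving the GPU for the network is scale-out.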

Modern GPUs and accelerators are tuned to use every watt of power the platform makes available to them. Hyperscalers therefore need an interconnect that does not compromise on performance yet consumes as little power as possible, so that more of the platform's power budget can go to the GPUs and accelerators and raise their token generation rate. General-purpose compute and storage architectures, by contrast, use a large number of low-lane-count PCIe peripherals, such as SSDs, lower-speed NICs, and I/O cards. To serve these devices, general-purpose switches are built with many PCIe port controllers, which adds complexity and increases power consumption.

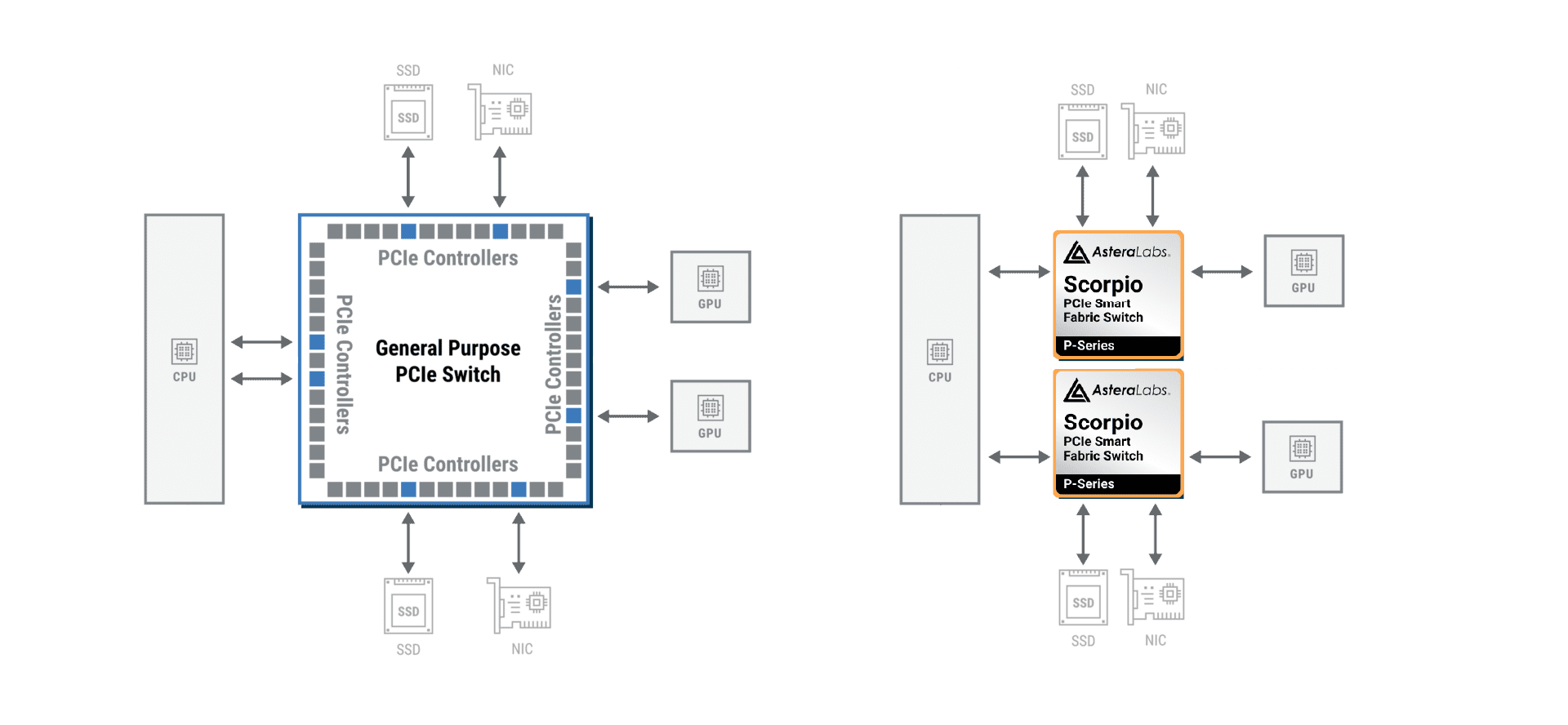

Figure 1 below shows that an AI workload grossly underutilizes the PCIe port controllers available in a general-purpose switch.

Figure 1: PCIe Controllers in General-Purpose Switches and Scorpio P-Series

Scorpio P-Series Fabric Switches are built for AI head-node connectivity and are architected for mixed-traffic GPU-CPU/NIC/SSD workloads. Figure 1 above also shows how Scorpio’s PCIe port controller optimization lets the application fully utilize the available PCIe port controllers. By combining high performance for AI workloads with low power consumption through full controller utilization, Scorpio P-Series provides efficient fabric connectivity with unparalleled performance per watt.
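As a rough illustration of the utilization argument, the Python sketch below counts how many of a switch's port controllers a single AI head-node unit would occupy. All controller counts and the link list are hypothetical values chosen for the example, not specifications of Scorpio P-Series or any other product, and the one-link-per-controller rule is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Switch:
    name: str
    port_controllers: int  # hypothetical controller count, not a product spec


def controller_utilization(switch: Switch, links: list[str]) -> float:
    """Fraction of the switch's port controllers in use.

    Simplifying assumption: each attached link occupies exactly one controller.
    """
    if len(links) > switch.port_controllers:
        raise ValueError(f"{switch.name} has too few controllers for {len(links)} links")
    return len(links) / switch.port_controllers


# Hypothetical AI head-node unit: one GPU, one NIC, one CPU uplink, two SSDs.
head_node_links = ["GPU x16", "NIC x16", "CPU x16", "SSD x4", "SSD x4"]

general_purpose = Switch("general-purpose (hypothetical)", port_controllers=32)
right_sized = Switch("right-sized (hypothetical)", port_controllers=5)

for sw in (general_purpose, right_sized):
    print(f"{sw.name}: {controller_utilization(sw, head_node_links):.0%}")
```

With these made-up numbers the general-purpose switch reports 16% utilization and the right-sized switch 100%. The point is the ratio: every idle controller still contributes to area and power without carrying AI traffic.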

Predictable Performance for Increased GPU Utilization

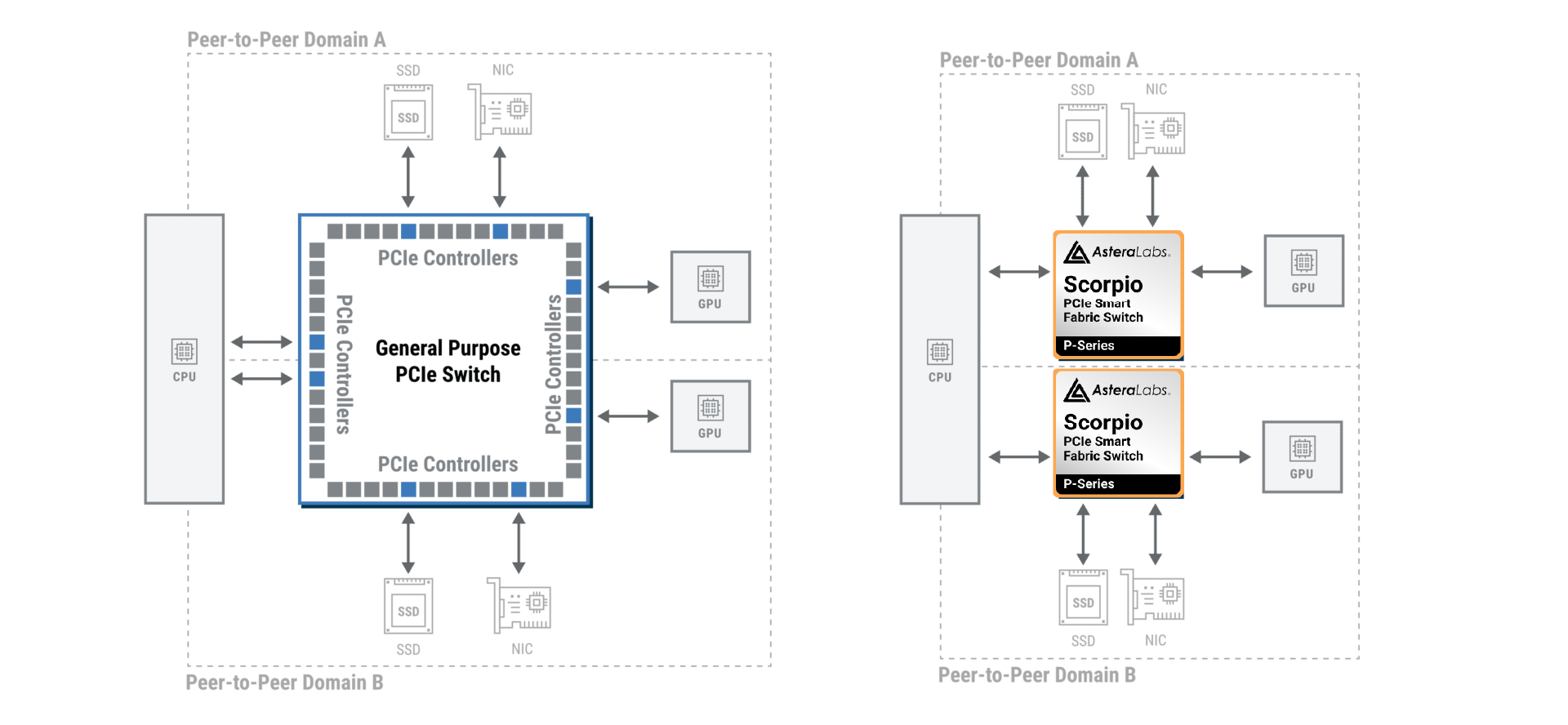

General-purpose switches are designed for a wide range of applications and use software (or synthetic) partitioning to virtualize one large switch into several smaller switches. This type of partitioning was originally designed for, and is commonly used in, storage applications, but it has a negative impact on high-performance AI workloads. Figure 2 below shows a larger general-purpose switch soft-partitioned into two PCIe domains serving two GPUs.

Figure 2: Creating partitions in software in a single switch core vs. Scorpio P-Series atomic ingest/scale-out resource pools

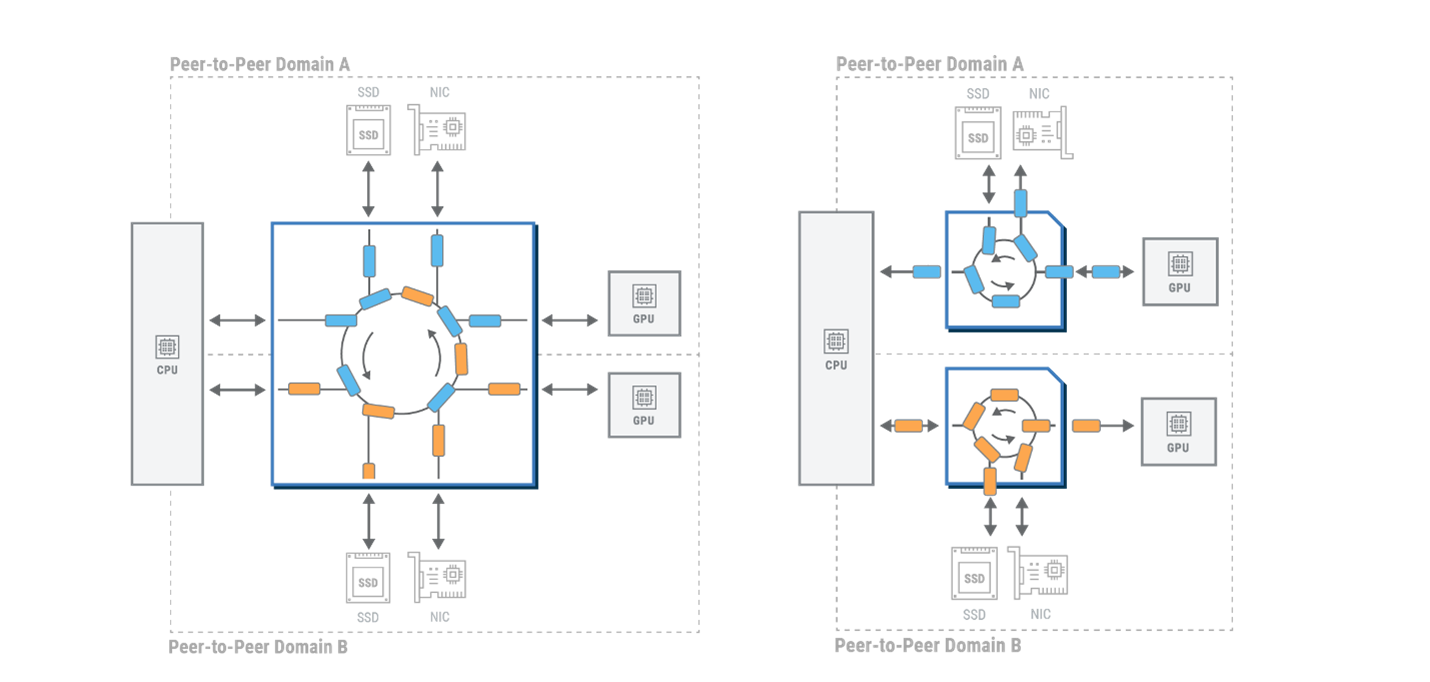

Although the AI data flow for each GPU stays within its own peer-to-peer domain, both GPUs and all 8 link partners are forced to compete for the same scarce resource: the switch core. The result is lower GPU utilization, caused by a higher risk of data starvation and inconsistent traffic flow to and from the GPU, as illustrated in Figure 3 below.
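The noisy-neighbor effect can be sketched with a toy model in which two soft partitions draw on one shared switch core. Proportional sharing under overload is an assumption made for illustration, not a description of how any particular switch arbitrates traffic, and the capacity and demand figures are in arbitrary units.

```python
def proportional_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split a shared switch-core capacity among partitions in proportion to demand.

    Toy model (assumption): when total demand exceeds capacity, every partition
    receives the same fraction of what it asked for; otherwise all demand is met.
    """
    total = sum(demands.values())
    if total <= capacity:
        return dict(demands)
    scale = capacity / total
    return {name: d * scale for name, d in demands.items()}


CORE_CAPACITY = 100.0  # hypothetical shared-core budget, arbitrary units

quiet = proportional_share(CORE_CAPACITY, {"GPU A": 50.0, "GPU B": 50.0})
noisy = proportional_share(CORE_CAPACITY, {"GPU A": 50.0, "GPU B": 150.0})
print(quiet)  # GPU A gets all 50 units it asked for
print(noisy)  # GPU A drops to 25 units because GPU B's burst saturates the core
```

GPU A's own demand never changes, yet its served bandwidth halves when its neighbor bursts, which is the starvation and inconsistency described above.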

Figure 3: Impact of a shared switch core (left) and alleviation of noisy neighbor with connectivity islands (right)

Scorpio P-Series Fabric Switches are separate modular devices, each creating an atomic unit of data ingest and scale-out that consists of a GPU or accelerator and its associated compute, network, and storage. These atomic units run completely independently, with zero impact on other atomic units. As shown in Figure 3 above, each Scorpio P-Series Fabric Switch dedicates ingest and scale-out resources to each GPU. This removes the risk of contention from a neighboring GPU consuming an uneven share of the switch core and delivers maximum, predictable performance with higher efficiency and GPU utilization.
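For contrast, here is a toy Python model of dedicated atomic units: each GPU has its own switch budget, so the bandwidth it is served depends only on its own demand. The `AtomicUnit` class and its capacity values are hypothetical and expressed in arbitrary units; this is a sketch of the isolation idea, not a model of Scorpio P-Series internals.

```python
from dataclasses import dataclass


@dataclass
class AtomicUnit:
    """One GPU plus its own ingest/scale-out switch resources (toy model)."""
    gpu: str
    capacity: float  # hypothetical per-unit switch budget, arbitrary units

    def served(self, demand: float) -> float:
        # Limited only by this unit's own capacity; other units never enter the calculation.
        return min(demand, self.capacity)


units = [AtomicUnit("GPU A", 50.0), AtomicUnit("GPU B", 50.0)]

for b_demand in (50.0, 150.0):  # GPU B quiet, then bursting
    demands = {"GPU A": 50.0, "GPU B": b_demand}
    served = {u.gpu: u.served(demands[u.gpu]) for u in units}
    print(f"GPU B demand {b_demand}: {served}")
```

In this sketch GPU A is served the same 50 units whether GPU B is idle or bursting. The trade-off in the model is that a bursting GPU is capped at its own unit's budget instead of borrowing from its neighbor.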

Conclusion

In the rapidly evolving landscape of AI infrastructure, purpose-built solutions are essential to optimize both performance and efficiency. Astera Labs’ Scorpio P-Series Fabric Switches redefine fabric switching by addressing the unique needs of AI workloads, from dedicated ports for data ingestion to modular atomic units of connectivity. Scorpio P-Series not only meets the high-performance demands of AI but also ensures reliability and scalability. As the industry’s first PCIe 6 switch, Scorpio P-Series is ready to accelerate the deployment of next-gen AI data centers and empower the future of AI-driven technology.