In today’s rapidly evolving AI landscape, hyperscale data centers are under immense pressure to keep up with the processing demands of the AI data flows that power generative AI applications. AI models are advancing at a rapid pace and scale: four major LLMs are on track for release in Q4 2024, while models such as Gemini 1.5 Pro-002 weigh in at 1.5 trillion parameters, with others to follow [1]. For reference, OpenAI released ChatGPT in 2022 based on the GPT-3 architecture, which used a model with 175 billion parameters.

Next-generation GPUs and AI accelerators are evolving rapidly to satisfy this intense demand for computational capacity with increased efficiency. They double I/O bandwidth every generation, and new platforms are deployed on a demanding annual upgrade cycle.

Fabric Switches for the AI Era

Astera Labs’ Scorpio Smart Fabric Switch portfolio is purpose-built to enable hyperscalers to deploy cloud-scale AI platforms at unprecedented pace and scale:

- Improves energy efficiency with fabric solutions that maximize performance per watt

- Increases GPU utilization with predictable, secure, and high-performance data flows

- Maximizes uptime with COSMOS delivering unprecedented data center observability

- Reduces time to market with flexible software-defined architecture and seamless interoperability

The Scorpio portfolio features two application-specific product lines – Scorpio P-Series and Scorpio X-Series – built from the ground up for AI servers and AI clusters.

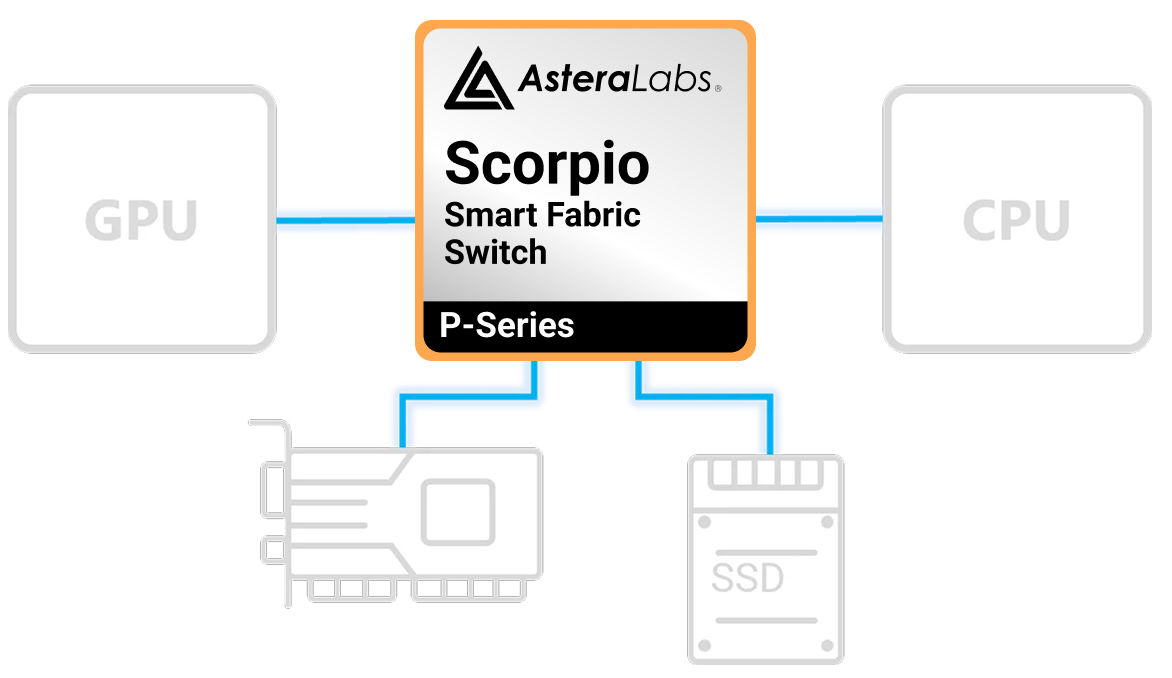

Scorpio P-Series Fabric Switches, the industry’s first to support PCIe 6, are architected for mixed traffic head-node connectivity for data ingest across a diverse ecosystem of PCIe hosts and endpoints.

Figure 1: Scorpio P-Series Fabric Switches
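To put PCIe 6 support in context, the sketch below computes the raw per-direction bandwidth of a link from the per-lane transfer rates defined for PCIe generations 3 through 6 (8, 16, 32, and 64 GT/s). It is an illustration only: the function name is made up for this example, and the figures ignore encoding and protocol overhead, so delivered throughput is lower.

```python
# Raw per-lane transfer rates in GT/s for each PCIe generation.
PCIE_GT_PER_LANE = {3: 8, 4: 16, 5: 32, 6: 64}


def raw_link_bandwidth_gbytes(gen: int, lanes: int = 16) -> float:
    """Raw one-direction bandwidth in GB/s, ignoring encoding and protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * lanes / 8  # 8 bits per byte


for gen in sorted(PCIE_GT_PER_LANE):
    bw = raw_link_bandwidth_gbytes(gen)
    print(f"PCIe {gen} x16: {bw:.0f} GB/s per direction (raw)")
```

Each generation doubles the per-lane rate, so an x16 PCIe 6 link carries twice the raw bandwidth of an x16 PCIe 5 link.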

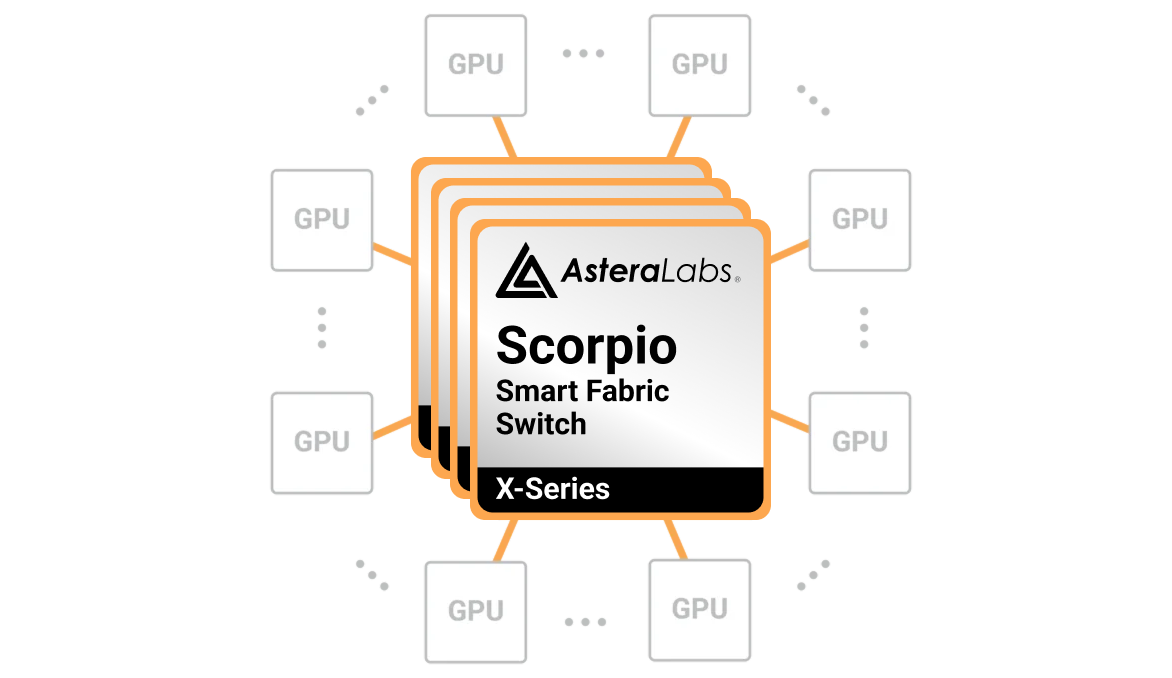

Scorpio X-Series Fabric Switches for scale-up clusters are architected to deliver the highest backend GPU-to-GPU bandwidth while harnessing software-defined architecture for platform-specific customization.

Figure 2: Scorpio X-Series Fabric Switches

Scorpio Solves Key Challenges for Next-Gen AI Infrastructure

The Scorpio Smart Fabric Switch portfolio is built from the ground up to address several critical challenges with next-generation AI infrastructure deployments:

General-purpose switches are not optimized for AI workloads.

PCI Express® (PCIe®) switches used in AI servers today need to provide a high-bandwidth, low-latency path from the CPU, network, and storage to GPU or accelerator memory. The flow of data from these sources into GPU memory is called data ingestion. In parallel, the PCIe switch must carry data out of the GPU to the scale-out network via the network interface card.

Modern GPUs and accelerators are tuned to use every watt of power the platform makes available to them. Hyperscalers need energy-efficient connectivity solutions that deliver uncompromised performance while returning as much power as possible to the accelerator or GPU.

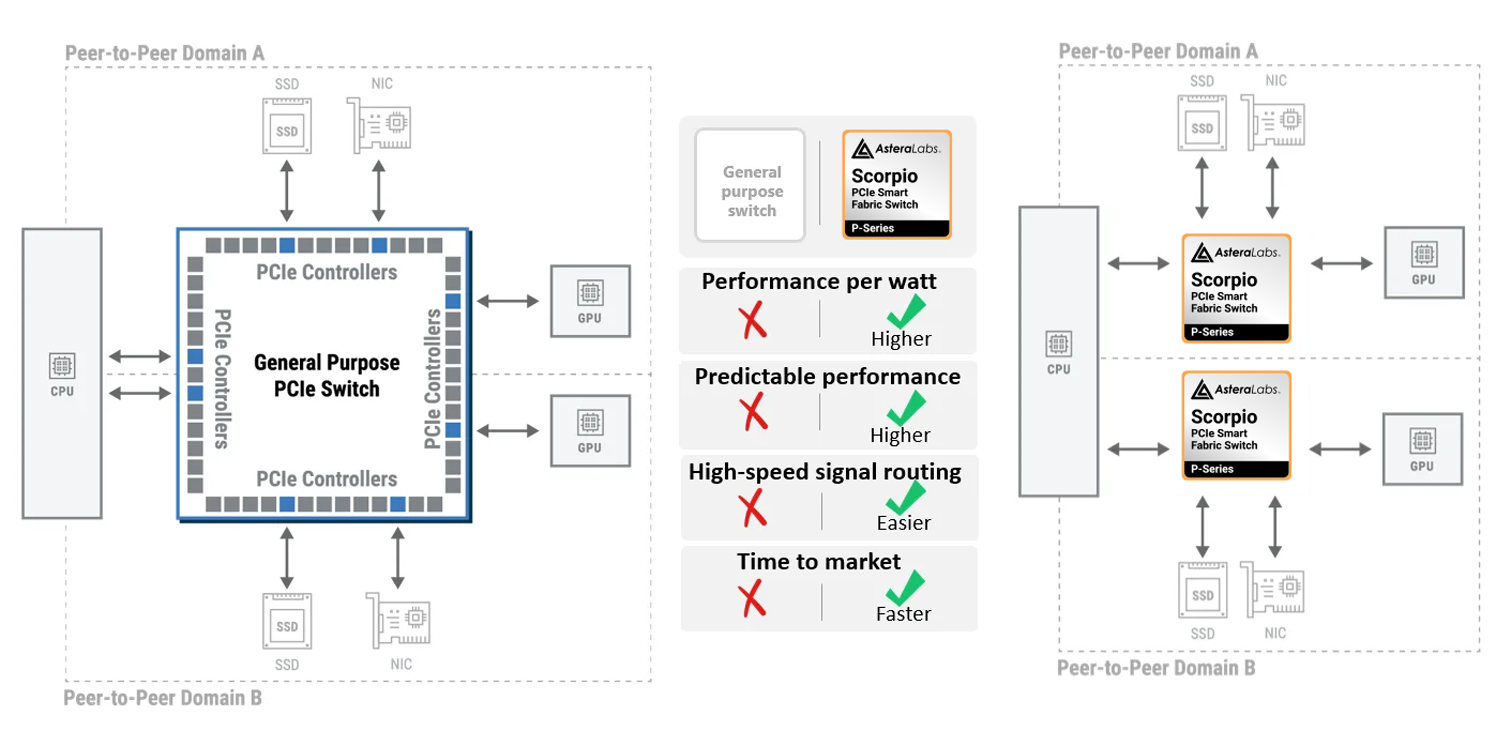

General-purpose switches widely deployed today are burdened with several challenges (as shown in Figure 3). They:

- Grossly underutilize the PCIe port controllers in the device, resulting in higher power consumption

- Share the same switch core across multiple GPUs, leading to unpredictable performance

- Require complex board routing to funnel high-speed signals to a larger device, creating signal integrity issues

- Require longer system development cycles, lengthening time-to-market

Figure 3: General-purpose switches vs. Scorpio P-Series Fabric Switch built for AI workloads.

Scorpio P-Series Fabric Switches are built specifically for AI head node connectivity and architected for mixed traffic GPU-CPU/NIC/SSD workloads. Figure 3 above shows how the Scorpio P-Series solution provides:

- Higher performance per watt by optimizing the controllers for AI workloads

- Predictable performance by providing dedicated switching hardware

- Modular GPU-aligned design providing easier high-speed signal routing, lower crosstalk, and better signal integrity

- A purpose-built design for AI workloads that delivers peak performance and faster time-to-market

An explosion in GPUs and accelerators with unique needs for scale-up.

As the industry expands from training-centric data center buildouts toward inference-centric buildouts that range from small to medium to large scale, we are seeing an increase in custom accelerators built to serve specialized AI workloads. These solutions, often developed by hyperscalers, are homogeneous and present significant opportunities to innovate on connectivity for scale-up.

Scorpio X-Series Fabric Switches are purpose-built to deliver the highest back-end GPU-to-GPU bandwidth and support platform-specific customizations through their software-defined architecture. Protocol enhancements, bandwidth and latency tuning, and expanded telemetry features help reliably scale homogeneous GPU or accelerator fabrics. The result is a better direct user experience for real-time insights and higher uptime, which increases return on investment for massive AI training and inference buildouts.

Astera Labs’ software-defined architecture supports an open fabric ecosystem while also enabling customized homogeneous GPU clusters for application-specific AI workloads.

Connectivity issues have a larger impact on AI workloads as clusters scale across racks.

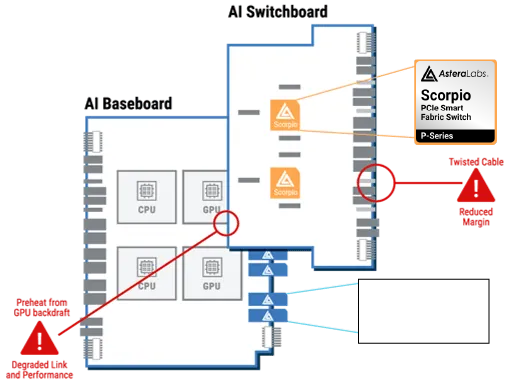

As the industry continues scaling GPU and accelerator clusters from multiple devices on a board, to multiple sleds in a rack, to multiple racks in a data center, there is an increasing need to contain the blast radius of a failure. When a component or connectivity failure hits a cluster of accelerators running a job, the job needs to restart, so pinpointing the exact location of the failure is increasingly critical to minimizing downtime. Figure 4 highlights a few common causes of failure.

Figure 4: Example drivers of failure in the data center (heat, cable issues)
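A rough way to see why failures matter more as clusters grow: if a job spans N components (accelerators, links, cables) and any single failure forces a restart, the chance of an interruption compounds with N. The sketch below assumes independent failures with a uniform per-component probability. Both the 0.1% figure and the function name are illustrative assumptions, not measured data.

```python
def job_interruption_probability(n_components: int, p_fail: float) -> float:
    """P(at least one failure) = 1 - (1 - p)^N, assuming independent failures."""
    return 1.0 - (1.0 - p_fail) ** n_components


# Hypothetical per-component failure probability over one job run.
P_FAIL = 0.001

# Illustrative sizes: a board, a rack, many racks.
for n in (8, 64, 1024):
    p = job_interruption_probability(n, P_FAIL)
    print(f"{n:>5} components: {p:.1%} chance the job must restart")
```

Even with a small per-component failure rate, the restart probability rises quickly with cluster size, which is why locating the failing component fast becomes so valuable.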

Aries PCIe/CXL Smart DSP Retimers have been deployed for years by hyperscalers to help identify issues like these, prevent failures, and ensure uptime. When Scorpio Smart Fabric Switches are deployed into these same data centers, Scorpio and Aries work hand-in-hand to provide a deeper protocol layer of debug, diagnostics, and telemetry. Combined, Scorpio and Aries provide unprecedented data center observability, generating extensive telemetry that can be used to identify connectivity issues, dispatch help to re-seat cables, and even predict failures before they occur to maximize server utilization and uptime.

Expanding Astera Labs’ Intelligent Connectivity Platform

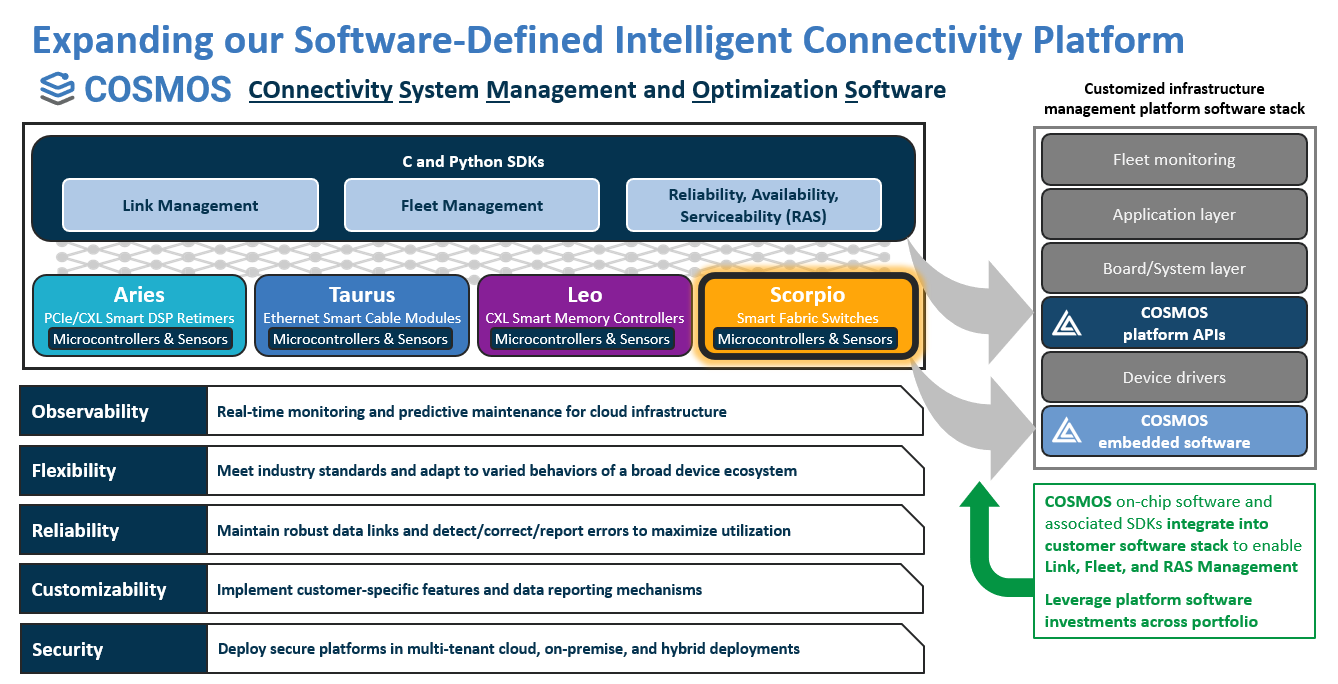

Driven by the growth of AI workloads at scale, Astera Labs devised its software-defined Intelligent Connectivity Platform, which comprises high-speed ICs, modules, and boards along with COSMOS (COnnectivity System Management and Optimization Software).

Scorpio Smart Fabric Switches expand the Intelligent Connectivity Platform with new ICs and boards. Built on the COSMOS software suite already integrated into customer deployments, Scorpio delivers unprecedented data center observability, enhanced security, and extensive fleet management capabilities.

When paired with Astera Labs’ Aries PCIe/CXL Smart DSP Retimers deployed in a system, Scorpio delivers unmatched insights into the state and health of AI servers. COSMOS is natively supported by Scorpio Smart Fabric Switches, so deployments that already use COSMOS for Aries Smart DSP Retimers can extend fleet management to Scorpio Smart Fabric Switches without additional software development. By leveraging unified diagnostics across both devices in a link, customers can identify and repair degraded links while ensuring maximum uptime and availability.
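As a loose illustration of what unified diagnostics across both devices in a link could enable, the sketch below compares error counters for each segment of a retimed link to guess which segment is degraded. Everything here is hypothetical: the `LinkTelemetry` fields, the threshold, and the sample values are invented for the example and do not reflect the COSMOS API or real device data.

```python
from dataclasses import dataclass


@dataclass
class LinkTelemetry:
    """Hypothetical per-link counters; not an actual COSMOS data structure."""
    link_id: str
    switch_side_errors: int    # errors observed on the switch <-> retimer segment
    endpoint_side_errors: int  # errors observed on the retimer <-> endpoint segment


def degraded_segments(t: LinkTelemetry, threshold: int = 100) -> list[str]:
    """Return the link segments whose error count exceeds the threshold."""
    segments = []
    if t.switch_side_errors > threshold:
        segments.append("switch-retimer")
    if t.endpoint_side_errors > threshold:
        segments.append("retimer-endpoint")
    return segments


# Invented sample data for illustration only.
fleet = [
    LinkTelemetry("rack1/sled3/gpu0", switch_side_errors=2, endpoint_side_errors=4),
    LinkTelemetry("rack1/sled3/gpu1", switch_side_errors=3, endpoint_side_errors=950),
]

for link in fleet:
    bad = degraded_segments(link)
    if bad:
        print(f"{link.link_id}: inspect {', '.join(bad)} segment")
```

With a retimer in the middle of the link, counters from both devices can narrow a fault to one segment rather than the whole path, which is the kind of localization that shortens repair time.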

Scorpio Smart Fabric Switches protect multi-tenant systems with confidential compute support built on leading security standards such as OCP, NIST, and FIPS. They are designed to protect data in transit, data in use, and data at rest, and they use OCP SAFE certified immutable code that supports Secure Boot authentication and a chain of trust for all firmware layers.

Learn more: Request the white paper today

Request the white paper "How Your Hyperscale Data Center Can Overcome the Challenges of Next-Gen AI Infrastructure with Smart Fabric Switches" today to learn how Scorpio is ready to propel the deployment of next-generation AI servers and clusters at cloud scale.

Citations: